Safe RL using Adaptive Penalty

Unofficial implementation of the paper "Conservative and Adaptive Penalty for Model-Based Safe Reinforcement Learning" by Ma et al.

This project explores CAP, a model-based approach to safe RL. We formulate the safe RL problem as a constrained Markov decision process (CMDP) and optimise reward and cost separately. CAP adaptively controls the cost penalty, which encourages more exploratory behavior as the number of environment interactions increases. Our contributions are integrating CAP with the Safety Gym environment and introducing a novel optimized gradient approach for policy learning.
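To make the idea of an adaptive penalty concrete, here is a minimal sketch and not the code in this repository. It assumes the penalty is a non-negative weight `kappa` that scales a model-uncertainty term added to the predicted cost, and that `kappa` is nudged up when an episode's true cost exceeds a budget and down otherwise. The class name `AdaptivePenalty`, the parameters `cost_budget` and `step_size`, and the simple integral-style update rule are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class AdaptivePenalty:
    """Hypothetical helper: scales an uncertainty-based cost penalty."""

    cost_budget: float      # assumed per-episode cost limit of the CMDP
    step_size: float = 0.1  # assumed step size for the penalty update
    kappa: float = 1.0      # current penalty weight, kept non-negative

    def conservative_cost(self, predicted_cost: float, uncertainty: float) -> float:
        # Inflate the model's cost prediction in proportion to its uncertainty.
        return predicted_cost + self.kappa * uncertainty

    def update(self, episode_cost: float) -> float:
        # Raise kappa when the true episodic cost exceeds the budget,
        # lower it (down to zero) when the agent stays within the budget.
        violation = episode_cost - self.cost_budget
        self.kappa = max(0.0, self.kappa + self.step_size * violation)
        return self.kappa


if __name__ == "__main__":
    penalty = AdaptivePenalty(cost_budget=10.0)
    print(penalty.conservative_cost(predicted_cost=2.0, uncertainty=0.5))
    print(penalty.update(episode_cost=25.0))  # over budget: kappa grows
    print(penalty.update(episode_cost=0.0))   # under budget: kappa shrinks
```

Under these assumptions, a smaller `kappa` makes the planner less conservative, which is one way to read the claim that exploration increases once the agent has gathered enough safe experience.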

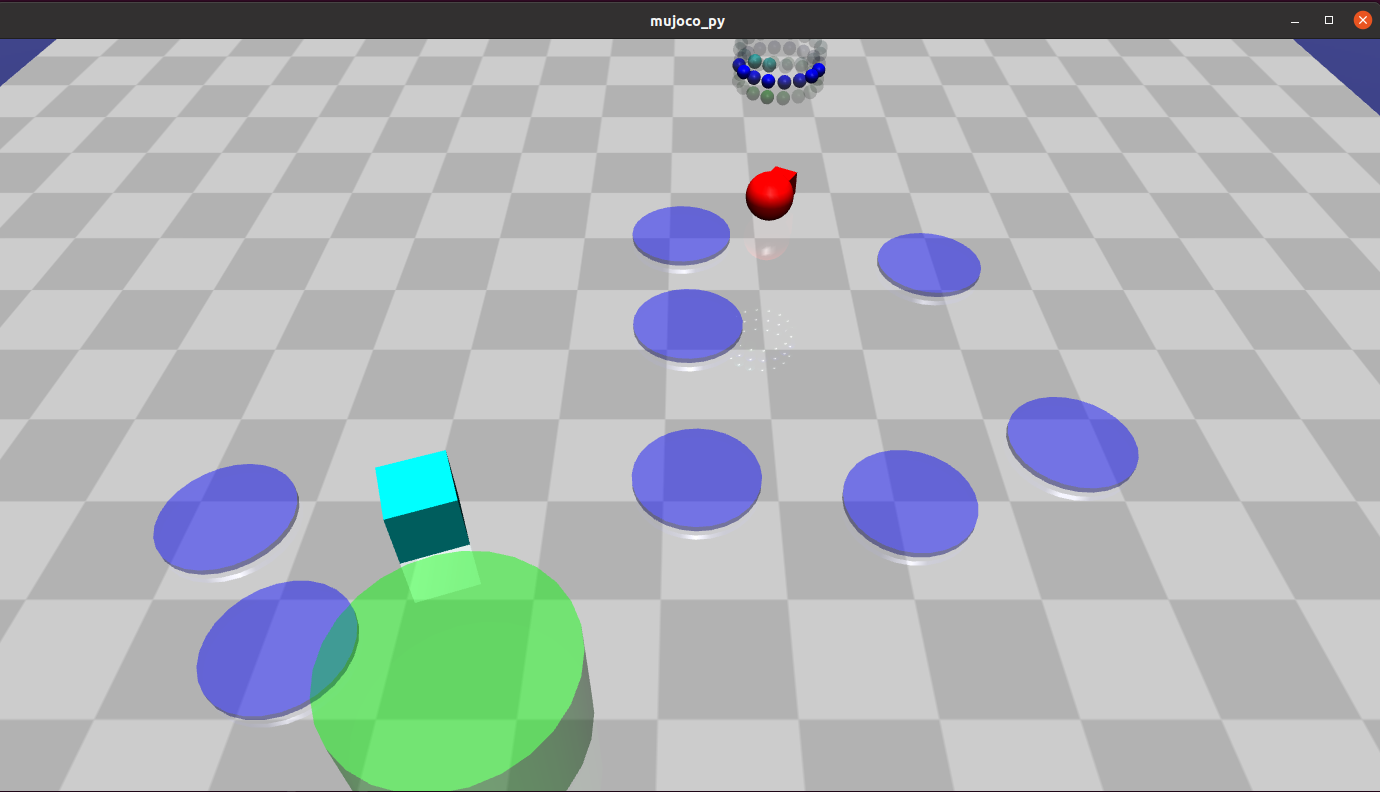

Example of the environment: The agent (red) aims to reach the goal position (green) while avoiding unsafe regions (light and dark blue).

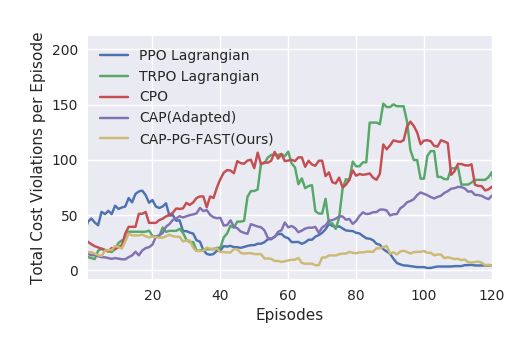

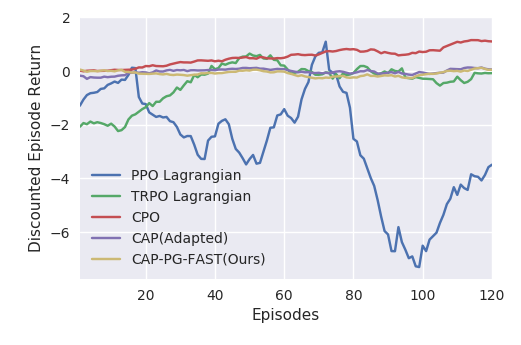

In our experiments, we observe fewer cost violations on the point-mass Safety Gym environment.

Left: episodic cost (lower is better). Right: episodic return (higher is better).

The code as well as installation instructions are provided here.